As you probably know the basic idea behind any ML is the idea of linear regression. But what is that if not just a bunch of words?

The basic idea is very simple. You have one parameter, say the height of a person, and another correlated parameter — say weight. Usually the higher the person — the more their weight. This can be expressed as

weight= f(height)

Where f is some function. Finding f is the process of linear regression. Why linear? Because we assume that there is a linear correlation between weight and height, and it can be represented by a linear function. It might not be true in all cases, we do it for simplicity.

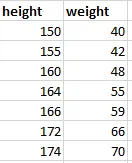

So say we have this average distribution:

To find f in this case we need to fit a linear function so that it represents the data as close as possible. Something like this:

As you may remember linear function is represented as

y = f(x), y = a*x + b

where a is the slope (angle) and b is the intercept (the point where the line crosses the axis). So to find f means to find correct a and b coefficients.

How we can understand if a set of a, b coefficients is better than another one? We use the method of least squares — get the square of distances of all points to the line and sum it. That would be the score — if it is lower than for another set, it is better. We square to make comparison easier because points can be on other sides of the line and therefore the distance can be positive/negative numbers. For example:

Now, how do we find a and b for a given set? Well, as mentioned above we need to find a line with the least sum of squares (called loss). We can use a method called gradient descent. We put the line in a random position at first and try to move it in the direction that minimizes the loss. We move it a little bit each step and see if it makes a loss smaller.

The term “regression” in “linear regression” has a historical origin. It was first used by Sir Francis Galton, a British polymath, in the context of his studies on heredity. In the late 19th century, Galton was investigating the relationship between the heights of parents and their children. He observed a phenomenon that he termed “regression towards mediocrity” (now more commonly referred to as “regression to the mean”).

Galton noticed that, although tall parents tended to have taller-than-average children, these children were generally not as tall as their parents. Similarly, the children of short parents tended to be shorter than average, but not as short as their parents. In other words, the children’s heights tended to “regress” or move towards the average height of the population.

Galton’s work was later formalized mathematically by Karl Pearson and others, who developed the method of least squares to find the best-fitting line through a set of data points. This method was used to quantify the strength and nature of the relationship between two variables, a process they termed “regression analysis.”

Over time, the term “regression” became associated with statistical techniques used to model and analyze the relationship between variables.